Second Monitor not detected in Windows 11/10

Before you begin, make sure that Windows has all the latest updates installed, your second monitor hardware is working, and you have set up the dual monitors properly. This is a common problem with external monitors, and it can also occur when you set up a third monitor. You will need a working understanding of Windows settings and an administrator account.

1] Restart your Windows PC

Restarting a Windows PC fixes many problems that we might otherwise spend hours troubleshooting. It is a well-known and common step, but if you haven't done it yet, restart your computer. Also, rule out basic issues such as an unplugged power cord or a loose or disconnected display cable.

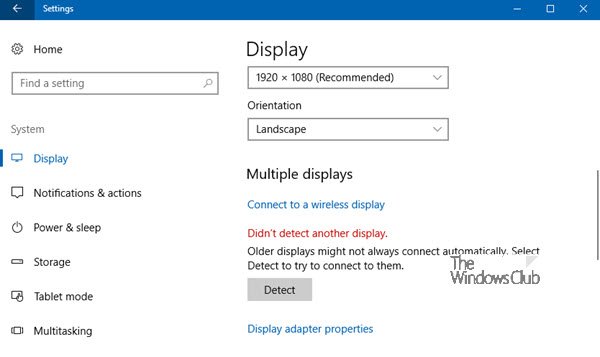

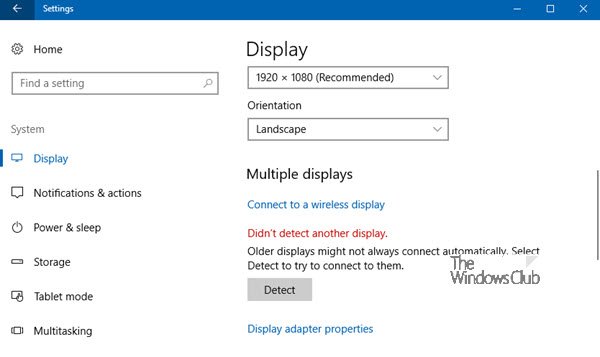

2] Force Windows to Detect the Second PC Monitor

If your operating system cannot detect the other monitor, right-click Start, select Run, type desk.cpl in the Run box, and press Enter to open Display settings. The second monitor is usually detected automatically; if it is not, click the Detect button under Multiple displays to detect it manually. Also, make sure that the drop-down below the display diagram is set to Extend these displays. If it is set to anything else, such as Show only on 1 or Show only on 2, that is your problem: these options disable one of the displays. If this doesn't work either, go to System > Display > Advanced display and select your monitor. Then check whether the option Remove display from desktop is toggled on. If it is, turn it off. Your monitor should now be available.
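The check above can also be scripted. The following is a minimal sketch, not an official tool: it asks Windows how many monitors are part of the desktop through the Win32 `GetSystemMetrics` call and builds the `DisplaySwitch.exe` command for a given projection mode. The helper names (`projection_command`, `active_monitor_count`) are made up for this example, and the `DisplaySwitch.exe` switches are the ones commonly used on Windows 10; treat their behavior on your Windows 11 build as an assumption to verify.

```python
import ctypes
import sys
from typing import List, Optional

SM_CMONITORS = 80  # GetSystemMetrics index: number of monitors on the desktop

# Assumed mapping of projection modes to DisplaySwitch.exe switches
# (as commonly used on Windows 10; verify on your own build).
PROJECTION_FLAGS = {
    "pc_screen_only": "/internal",
    "duplicate": "/clone",
    "extend": "/extend",
    "second_screen_only": "/external",
}


def projection_command(mode: str) -> List[str]:
    """Return the command line that would select the given projection mode."""
    if mode not in PROJECTION_FLAGS:
        raise ValueError(f"unknown projection mode: {mode!r}")
    return ["DisplaySwitch.exe", PROJECTION_FLAGS[mode]]


def active_monitor_count() -> Optional[int]:
    """Return how many monitors Windows currently uses, or None off Windows."""
    if sys.platform != "win32":
        return None
    return ctypes.windll.user32.GetSystemMetrics(SM_CMONITORS)


if __name__ == "__main__":
    count = active_monitor_count()
    print("Monitors in use:", count)
    if count == 1:
        # Only print the command; run it yourself once you have verified it.
        print("To extend the desktop, try:", " ".join(projection_command("extend")))
```

A result of 1 with two monitors plugged in suggests that Windows either has not detected the second display or is set to show on one screen only.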

3] Check Your Display Adapter

You can manually install or update the driver used by the display adapter. To do that, you first need to identify the OEM. Open Device Manager by pressing Win + X and then the M key. Locate the Display adapters entry and expand it. Depending on the number of GPUs onboard, you may see one or two adapters. To identify the OEM, look at the name; it is usually Intel, NVIDIA, or AMD. To update the existing driver, right-click the adapter and click Properties to open the display adapter properties. Then switch to the Driver tab and note the driver version. Next, visit the OEM website and download the latest driver. You can then install the graphics card driver update manually.
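If you prefer not to click through Device Manager, the same details can be read from a script. This is a rough sketch that assumes PowerShell is on the PATH and queries the `Win32_VideoController` WMI class; the function names (`parse_adapters`, `query_adapters`) are hypothetical and exist only for this example.

```python
import json
import subprocess
import sys
from typing import Dict, List

# PowerShell pipeline that lists each display adapter as JSON.
PS_QUERY = (
    "Get-CimInstance Win32_VideoController | "
    "Select-Object Name, AdapterCompatibility, DriverVersion | "
    "ConvertTo-Json"
)


def parse_adapters(json_text: str) -> List[Dict[str, str]]:
    """Turn the JSON emitted by PS_QUERY into a list of adapter records."""
    text = json_text.strip()
    if not text:
        return []
    data = json.loads(text)
    if isinstance(data, dict):
        # ConvertTo-Json emits a bare object when there is only one adapter.
        data = [data]
    return [
        {
            "name": str(item.get("Name") or ""),
            "vendor": str(item.get("AdapterCompatibility") or ""),
            "driver_version": str(item.get("DriverVersion") or ""),
        }
        for item in data
    ]


def query_adapters() -> List[Dict[str, str]]:
    """Run the query on Windows; return an empty list on other systems."""
    if sys.platform != "win32":
        return []
    result = subprocess.run(
        ["powershell", "-NoProfile", "-Command", PS_QUERY],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_adapters(result.stdout)


if __name__ == "__main__":
    for adapter in query_adapters():
        print(f'{adapter["name"]} ({adapter["vendor"]}): {adapter["driver_version"]}')
```

The vendor and version it prints are the values to look up on the OEM's download page.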

4] Update, Reinstall, Or Roll Back The Graphics Driver

More often than not, Windows fails to find the second monitor because of the video card. It can't detect the second display because the graphics card does not have the correct or latest drivers installed. So you need to update the drivers listed under Display adapters and Monitors. You may need to update or reinstall your NVIDIA driver. Check out our detailed guide on how to update the device drivers. If updating the drivers doesn't work, you can reinstall them: uninstall the display adapter in Device Manager, restart the PC, and let Windows install the driver again.

Lastly, if the issue occurred after installing a new driver, you can roll back the driver. Right-click the display adapter in Device Manager and select Properties. Then switch to the Driver tab and click the Roll Back Driver button. Windows will uninstall the current driver and restore the old driver, which is kept as a backup for a couple of days.
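When deciding whether an update is worthwhile, it helps to compare the version shown on the Driver tab with the one offered on the OEM's site. The sketch below is one way to do that for dotted version strings; the function names are invented for this example, and it assumes both strings use the same numbering scheme. Vendors sometimes advertise a different marketing number than the one Device Manager shows, so compare like with like.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Split a dotted version such as '31.0.15.3623' into integers."""
    return tuple(int(part) for part in version.strip().split("."))


def compare_driver_versions(installed: str, available: str) -> str:
    """Say whether the available driver is newer than the installed one."""
    have = parse_version(installed)
    offered = parse_version(available)
    # Pad the shorter version with zeros so '31.0.15' equals '31.0.15.0'.
    width = max(len(have), len(offered))
    have += (0,) * (width - len(have))
    offered += (0,) * (width - len(offered))
    if offered > have:
        return "update available"
    if offered < have:
        return "installed driver is newer"
    return "up to date"


if __name__ == "__main__":
    # Example values only; substitute the versions you actually see.
    print(compare_driver_versions("31.0.15.3623", "31.0.15.4601"))
```

If the installed driver is already the newest one and the problem started right after installing it, rolling back is the more promising option.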

5] Hardware troubleshooting

- Try changing the HDMI cable connecting the second monitor. If it works, we know the previous cable was faulty.
- Try using the second monitor with a different system. It would help isolate whether the issue is with the monitor or the primary system.
- Run the Hardware & Devices Troubleshooter and see if it finds a problem.
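The first two checks form a simple process of elimination, which can be written down as a short sketch. The function name and return labels are made up for this example; it only encodes the reasoning above and does not test any hardware.

```python
def isolate_fault(works_with_other_cable: bool, works_on_other_pc: bool) -> str:
    """Map the results of the two manual checks to the likely culprit."""
    if works_with_other_cable:
        # A new cable fixed it, so the old cable was faulty.
        return "cable"
    if works_on_other_pc:
        # The monitor is fine elsewhere, so look at the primary system.
        return "primary system"
    # It fails with a new cable and on another PC.
    return "monitor"


if __name__ == "__main__":
    print(isolate_fault(works_with_other_cable=False, works_on_other_pc=True))
```

A result of "primary system" points back to the port, driver, and settings checks described in the earlier steps.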

6] Connect a Wireless Display

A wireless display is a great way to extend your desktop and can serve as a temporary solution if the monitor is not working. Meanwhile, you can connect the monitor to another PC and check whether it works there.

Why won’t my monitor recognize HDMI?

If an HDMI cable is connected but your PC still cannot recognize your monitor, it is likely a hardware issue. You can check by using another HDMI cable, an alternate display with the same cable, or the same monitor and cable on another computer. If the cable and monitor work with another computer, then the problem is the GPU or the motherboard port. You may have to replace the faulty part or get in touch with the service center to fix it.

Do I need to connect to GPU HDMI or Motherboard HDMI?

Most CPUs have an onboard GPU, which outputs through the motherboard HDMI port. If you have a dedicated GPU (a graphics card), you need to connect to the HDMI port on the card, because a dedicated GPU usually takes over from the onboard GPU. So check which HDMI port your monitor is connected to and make sure it is the one on the graphics card. Some CPUs don't have an onboard GPU, but users get confused because the motherboard still offers an HDMI port. In this case, too, you need to connect the HDMI cable to the graphics card port instead of the motherboard display port.
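The rule of thumb above can be summarized as a small decision helper. This is only an illustration of the reasoning; the function name and return labels are hypothetical, and some systems can be configured to keep the onboard GPU active alongside a graphics card.

```python
def port_to_use(has_graphics_card: bool, cpu_has_onboard_gpu: bool) -> str:
    """Suggest which HDMI port the monitor should be plugged into."""
    if has_graphics_card:
        # A dedicated card usually takes over from the onboard GPU.
        return "graphics card HDMI"
    if cpu_has_onboard_gpu:
        return "motherboard HDMI"
    # No GPU drives the motherboard port, so it cannot output a signal.
    return "no usable HDMI output"


if __name__ == "__main__":
    print(port_to_use(has_graphics_card=True, cpu_has_onboard_gpu=False))
```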

Do I need to enable HDMI output on the motherboard?

It happens rarely, but if the UEFI or BIOS offers an option to enable or disable the HDMI port, it should be enabled. To check, boot into the UEFI by pressing the F2 or Del key when the computer starts, and change the setting under the display section.

Most of the time, Windows detects external displays or monitors without a problem. However, at times the settings in Windows or the drivers can ruin the experience. I hope the post was easy to follow and that you could finally detect the second monitor. Let us know if you have any other ideas.